Okay, pretty big update with a pretty huge fix. And with this fix I guess I will proudly wear the "dunce" hat.

The video is pretty much nonsensical, since it was my brain being blown by how the game manages key frames, which I will attempt to explain here.

As stated multiple times, the game's MOTN format is effectively a binary-encoded dope sheet. It does this by encoding different pieces of information into different blocks: (1) the bone IDs, (2) the number of in-between frames, (3) which frames specifically, (4) a list of bits that describes how to read the next block, and (5) the key frame values. The part where I got stuck was block 4.

So to explain my folly, I'll start with what I adapted from PhilzYeah, which I have mentioned several times since this post.

Code:

    node.axis.forEach(d => {
        let index = 0;
        do {
            // One byte carries the flags for four frames, two bits per frame
            let keyframeBlockType = this.MEM.view.getUint8(this.MEM.ofs);
            b4++;
            this.MEM.ofs++;

            // First frame in this byte: 0x80 = high bit, 0x40 = low bit
            if (keyframeBlockType & 0x80) {
                node[d][index].high = true;
            }
            if (keyframeBlockType & 0x40) {
                node[d][index].low = true;
            }
            index++;

            // Second frame: 0x20 = high bit, 0x10 = low bit
            if (keyframeBlockType & 0x20) {
                node[d][index].high = true;
            }
            if (keyframeBlockType & 0x10) {
                node[d][index].low = true;
            }
            index++;

            // Third frame: 0x08 = high bit, 0x04 = low bit
            if (keyframeBlockType & 0x08) {
                node[d][index].high = true;
            }
            if (keyframeBlockType & 0x04) {
                node[d][index].low = true;
            }
            index++;

            // Fourth frame: 0x02 = high bit, 0x01 = low bit
            if (keyframeBlockType & 0x02) {
                node[d][index].high = true;
            }
            if (keyframeBlockType & 0x01) {
                node[d][index].low = true;
            }
            index++;
        } while (index < node[d].length);
    });
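For comparison, the same unpacking can be sketched as a loop over two-bit pairs. This is only an illustration: `unpackFrameFlags` is a hypothetical helper, not something from the game or from my parser, and it assumes four frames per byte with the most significant pair first, exactly as in the unrolled checks above. It also assumes that any unused pairs in the last byte are padding and can be ignored.

```javascript
// Hypothetical helper: unpack two flag bits per frame from a run of bytes.
// Assumes four frames per byte, most significant pair first
// (0x80/0x40, then 0x20/0x10, then 0x08/0x04, then 0x02/0x01).
function unpackFrameFlags(bytes, frameCount) {
  const flags = [];
  for (let i = 0; i < frameCount; i++) {
    const byte = bytes[i >> 2];        // four frames share one byte
    const shift = 6 - 2 * (i & 3);     // 6, 4, 2, 0 within the byte
    const pair = (byte >> shift) & 0x03;
    flags.push({
      high: (pair & 0x02) !== 0,       // upper bit of the pair
      low: (pair & 0x01) !== 0         // lower bit of the pair
    });
  }
  return flags;
}

// 0xE4 is 11 10 01 00 in binary, so the four frames get:
// high+low, high only, low only, neither.
console.log(unpackFrameFlags(Uint8Array.of(0xe4), 4));
```

Because the loop stops at `frameCount`, it never touches a frame index past the end of the list, which the unrolled version can do when the frame count is not a multiple of four.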

Basically, say we have a list of frames. For each frame we need to figure out which values are going to be read. The way the game does this is by declaring a list of bits, two per frame, where a high bit reads a pair of values and a low bit reads a single value. So I took that to mean that a high bit means the game reads an easing (left / right slope values), and a low bit means it reads a key frame value.

And the implementation I used to interpret that was as follows.

Code:

    node.axis.forEach(d => {
        node[d].forEach(frame => {
            // High bit: read two values and treat them as the easing pair
            if (frame.high) {
                let a = this.MEM.view.getUint16(this.MEM.ofs, true);
                a = this.API.decodeFloat16(a);
                this.MEM.ofs += 2;

                let b = this.MEM.view.getUint16(this.MEM.ofs, true);
                b = this.API.decodeFloat16(b);
                this.MEM.ofs += 2;

                frame.easing = [a, b];
            }

            // Low bit: read one value and treat it as the key frame value
            if (frame.low) {
                let a = this.MEM.view.getUint16(this.MEM.ofs, true);
                a = this.API.decodeFloat16(a);
                // Rotations are scaled by 65536 and reduced modulo 2π
                if (node.type === 'rot') {
                    a *= 65536;
                    a = a % (Math.PI * 2);
                }
                this.MEM.ofs += 2;
                frame.value = a;
            }

            // The flags are only needed while parsing
            delete frame.high;
            delete frame.low;
        });
    });

To clarify, the game doesn't actually use this implementation. The game uses a lookup table for each value in the bit field. But the values in the lookup table are simply the number of values being read for the specific bits that are set, so they can be derived, and the lookup table is not actually required. If anything, I would say the lookup table is more work, and I'm not sure why it exists to begin with, but I digress.

The problem with this was a pretty common mistake in reverse engineering: this wasn't actually the complete story. Generally with reverse engineering, the difference between stuff that works and stuff that doesn't work is pretty extreme. If something doesn't work, you will generally get out-of-bounds issues, errors, or really weird values, and you will know very quickly that something is broken. On the other side, when something works, it is generally because it is correct. And in this case, my parser would read to the end of the blocks exactly as expected, and the values being produced were realistic and reasonable.
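As a rough sketch of why the table can be derived: the high bit of a pair contributes two values and the low bit contributes one, so the count for a pair is just the sum of those. The names `valuesForPair` and `VALUES_PER_BYTE` are mine, and the 256-entry, one-entry-per-flag-byte layout is an assumption for illustration. I am not claiming this is how the game's table is actually laid out.

```javascript
// Number of 16-bit values implied by one two-bit flag pair:
// the high bit (0x02) contributes 2, the low bit (0x01) contributes 1.
function valuesForPair(pair) {
  return ((pair & 0x02) ? 2 : 0) + ((pair & 0x01) ? 1 : 0);
}

// Hypothetical table: total number of values implied by a whole flag byte,
// i.e. the sum over its four two-bit pairs. The game's real table layout
// is not shown here; this only demonstrates that the counts follow
// directly from the bits.
const VALUES_PER_BYTE = Uint8Array.from({ length: 256 }, (_, byte) =>
  valuesForPair(byte >> 6) +
  valuesForPair((byte >> 4) & 0x03) +
  valuesForPair((byte >> 2) & 0x03) +
  valuesForPair(byte & 0x03)
);

console.log(VALUES_PER_BYTE[0xe4]); // pairs 11, 10, 01, 00 give 3 + 2 + 1 + 0
```

One side effect worth noticing is that `valuesForPair(pair)` always equals `pair` itself, since the bit weights 2 and 1 are exactly the value counts.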

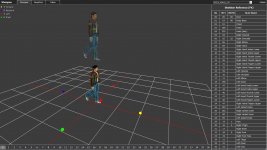

That being said, LemonHaze, who actively looks at the game code, kept telling me that my interpretation was incomplete, much to my confusion, until I came up with a way to test his claims. That test is the video above, which led me to finally being able to correct this. The confusion arises from how the game handles bit flags.

In my brain, a bit flag is set to specify one thing and one thing only: a single true/false statement. So if the high bit is set, read two easing values; if the low bit is set, read one key value. But this is not how the game interprets the bit flags. The way the game interprets them is more like the following.

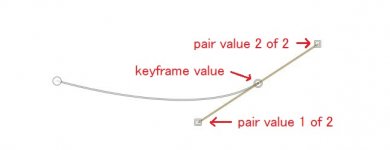

- If high bit is set, read two values

- If low bit is set, read one value

- If high and low bit are set, read three values

And this is why my brain was blown in the YouTube video: I realized that when only the high bit was set, the game was actually reading a key value as well. This allowed me to go back, discuss with LemonHaze, and update the implementation. So the correct way to interpret these bit flags is shown with the following code.

Code:

    node.axis.forEach(d => {
        node[d].forEach(frame => {
            if (frame.high && frame.low) {
                // Both bits: two slope values, then the key frame value
                let a = this.MEM.view.getUint16(this.MEM.ofs, true);
                a = this.API.decodeFloat16(a);
                this.MEM.ofs += 2;

                let b = this.MEM.view.getUint16(this.MEM.ofs, true);
                b = this.API.decodeFloat16(b);
                this.MEM.ofs += 2;

                let c = this.MEM.view.getUint16(this.MEM.ofs, true);
                c = this.API.decodeFloat16(c);
                this.MEM.ofs += 2;

                frame.easing = [a, b];
                // Rotations are scaled by 65536 and reduced modulo 2π
                if (node.type === 'rot') {
                    c *= 65536;
                    c = c % (Math.PI * 2);
                }
                frame.value = c;
            } else if (frame.high) {
                // High bit only: one slope value, then the key frame value
                let a = this.MEM.view.getUint16(this.MEM.ofs, true);
                a = this.API.decodeFloat16(a);
                this.MEM.ofs += 2;

                let b = this.MEM.view.getUint16(this.MEM.ofs, true);
                b = this.API.decodeFloat16(b);
                this.MEM.ofs += 2;

                frame.easing = [a];
                if (node.type === 'rot') {
                    b *= 65536;
                    b = b % (Math.PI * 2);
                }
                frame.value = b;
            } else if (frame.low) {
                // Low bit only: just the key frame value
                let a = this.MEM.view.getUint16(this.MEM.ofs, true);
                a = this.API.decodeFloat16(a);
                this.MEM.ofs += 2;

                if (node.type === 'rot') {
                    a *= 65536;
                    a = a % (Math.PI * 2);
                }
                frame.value = a;
            }

            // The flags are only needed while parsing
            delete frame.high;
            delete frame.low;
        });
    });
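One way to see why the earlier version still read to the end of every block: both interpretations consume exactly the same number of values for each flag pair, and they only disagree on what a high-only frame means. The sketch below uses plain numbers as stand-ins for already decoded values and skips the rotation scaling. `interpretOld` and `interpretNew` are hypothetical names for this illustration, not functions from my parser.

```javascript
// Earlier reading: high = two slopes, low = one key value, checked independently.
function interpretOld(flags, values) {
  let i = 0;
  const frames = flags.map(({ high, low }) => {
    const frame = {};
    if (high) { frame.easing = [values[i], values[i + 1]]; i += 2; }
    if (low) { frame.value = values[i]; i += 1; }
    return frame;
  });
  return { frames, consumed: i };
}

// Corrected reading: the last value read for a frame is always its key value,
// and anything read before it is a slope.
function interpretNew(flags, values) {
  let i = 0;
  const frames = flags.map(({ high, low }) => {
    const frame = {};
    const count = (high ? 2 : 0) + (low ? 1 : 0);
    if (count === 0) return frame;           // nothing stored for this frame
    const slopes = values.slice(i, i + count - 1);
    if (slopes.length) frame.easing = slopes;
    frame.value = values[i + count - 1];
    i += count;
    return frame;
  });
  return { frames, consumed: i };
}

// Three frames: high+low, high only, low only.
const flags = [
  { high: true, low: true },
  { high: true, low: false },
  { high: false, low: true }
];
const values = [1, 2, 3, 4, 5, 6];

const before = interpretOld(flags, values);
const after = interpretNew(flags, values);
console.log(before.consumed === after.consumed); // same read position either way
console.log(before.frames[1], after.frames[1]);  // only the high-only frame differs
```

In the old reading the high-only frame ends up with two slopes and no key value, which is where the blank slots came from. In the corrected reading it gets one slope and a key value, and the read position is unchanged.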

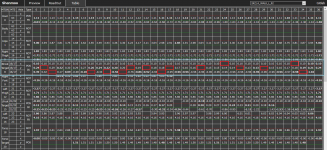

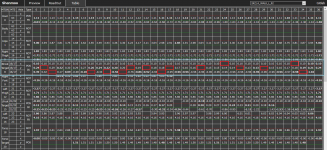

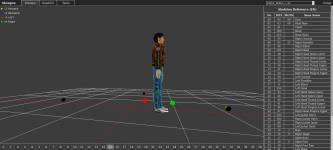

With this, we finally have a realistic view of all of the game's motion keyframe values. When only the low bit is set, we read the keyframe value. When only the high bit is set, we read a slope value and the keyframe value. And when both the high and low bits are set, we read two slope values and then the keyframe value. This effectively fills in the table where there were a massive number of blank slots, which means we can now have pretty massive confidence in the output from the parser. It also solves a lot of mysteries I had with the code previously, so what remains generally boils down to implementation.